Better data infrastructure is needed for the AI era

Modern AI workloads are extremely data-intensive. This applies not only to cutting-edge ventures such as training a modern foundation LLM, which naturally requires an enormous corpus of data, but also to more downstream use cases such as building AI assistants, automating processes or providing new services. From a technical standpoint, these scenarios boil down to operations such as fine-tuning on a specific dataset, evaluating solution quality, or running batch inference for labelling or embedding generation.

For the last 10 years, the TractoAI team has been building big data and AI infrastructure for various companies, including Nebius, Yandex and other businesses of different scales. Comparing our Big Tech background with the current state of AI-era data tools, our key impression is that the latter are surprisingly unready for scale. This post outlines several key challenges drawn from our experience. Some of them are addressed in our TractoAI product; for others, we have a vision for the future.

Data cross-usage

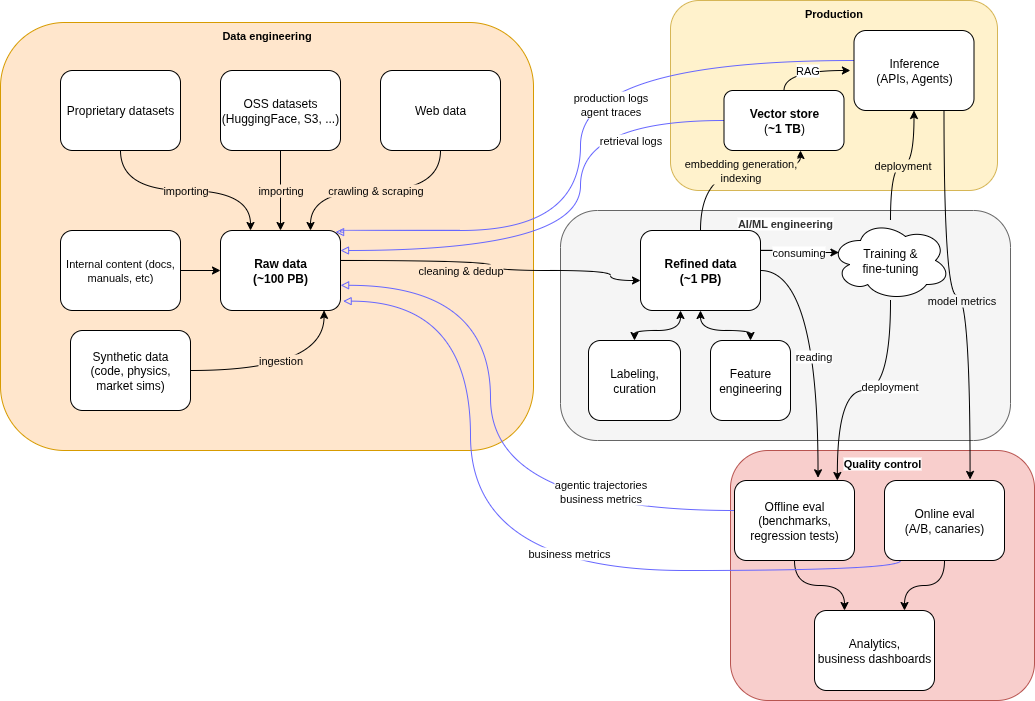

Nowadays, data flows are complex and have many cross-dependencies, ad-hoc steps, regular recalculations and streaming components. Let's imagine an abstract data lake of a modern AI company. It may include the following kinds of data:

- Raw datasets crawled from the web or other sources.
- Refined datasets derived from raw data through cleaning, filtering and enrichment, and used for model training or fine-tuning.
- Synthetic datasets generated by simulating agentic environments or world models, to supplement scarce or costly-to-obtain real data.
- Agent trajectories, obtained as side artifacts of production deployments or from synthetic evaluations, and used for reinforcement learning.
- Offline and online evaluation results (metrics, logs) used for model quality assessment, and sometimes even for retraining.

In advanced use cases, data flows are tightly coupled, creating a constant loop of data generation and feedback, as illustrated in the graphic at the beginning of this section.

While this looks nice and clean in theory, implementing such a working "flywheel" in practice introduces many challenges.

First, different parts of the data landscape often end up in different storage systems, forming unrelated namespaces. This complicates data reuse and requires extra effort to copy data back and forth. For example, raw data is often kept in object storage, production OLAP and OLTP data tends to reside in SQL databases, and event streams pass through specialized event brokers. Moreover, ML frameworks frequently operate on a filesystem abstraction, which either brings network filestores to the table or requires complex FUSE-like translation layers on top of storage like S3, at a cost in performance.

It is frequently not possible to predict which data is going to be used where. For example, you may deploy an AI assistant or agent, and collect logs for quality assessment in an LLM observability tool. However, at some point you may decide that you need to add a sample of failed cases from your production to your training set. If production logs and your AI data lake live in different storage worlds, this simple task becomes an infrastructural challenge and may end up being postponed for a long time. By contrast, if all data remained in the same namespace, this would be as easy as granting the necessary permissions to a user.

Second, the administrative overhead of managing various storage systems is easy to overlook. What looks like a managed solution at MVP scale may become complicated during the scaling phase. Moreover, the more independent tools there are, the more human effort is needed to maintain them. There is also a cost borne by data consumers: data scientists, analysts and ML engineers must explore data daily. A unified interface to all kinds of data enables faster data-driven decisions across all parts of the data flow, including the production, training and evaluation domains.

Finally, picking a solution that passes the scaling test may be tricky. There are many point solutions on the market, and they may work well at a small scale. Yet beyond the petabyte scale, things get complicated: you may easily fall out of a vendor's target audience if it focuses on many smaller customers rather than a few bigger ones. Therefore, choosing a storage provider and compute tools that guarantee scalability from the very beginning is a must.

To conclude this section, let me restate the main idea: making an AI data lake work well comes down to choosing scalable storage and compute that keep all artifacts accessible in one place. With the right foundation chosen from the very beginning, data can flow smoothly between production, training and evaluation, enabling reuse without friction and allowing teams to focus on improving quality rather than wrestling with infrastructure.

Tables and transactional processing

Another surprising regression that we see in the world of AI data organization is the relatively low level of dataset structuring. Frequently, datasets are distributed in the form of a collection of files, which are at best JSON, CSV or Parquet files; but in the worst case, they are a collection of random files in different formats and structures — images, audio files, videos, etc.

The problem with unstructured datasets is that they are hard to work with. When a data blob is tightly coupled with its metadata, you cannot efficiently operate at the metadata level without touching the data itself. This leads to unnecessary (and costly) IO overhead, amplified by the number of files. It is also challenging to enrich multimodal objects with new information, such as adding model-inferred labels or embeddings to a video, attaching a text transcription or storing multiple audio tracks for a single video. To work around this, people invent hacks such as storing metadata in separate files, or maintaining row-correlated datasets and joining them at read time.

In my view, a flexible but strict representation of a dataset should have the following properties:

- On the outer level, the dataset is a table: its rows correspond to independent records of the same kind, and its columns contain all the data we have about those records (objects, labels, features, etc.).
- It should allow specifying a schema, against which data is validated on write; the schema defines the column types.
- The schema must cover standard primitive types (strings, numbers, Booleans), containers (lists, dictionaries, structs) and multimodal data (images, audio, video, tensors).
- To support unstructured columns or nested fields, special data types such as "blob" (general binary data), "json" (JSON without a strict schema) or "any" (arbitrary data) are allowed.
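To make these properties concrete, here is a minimal sketch of validation on write, using only the Python standard library. All names (`Primitive`, `ListOf`, `Struct`, `Blob`, `Json`, `Table`) are hypothetical and do not reflect the API of TractoAI or any other system; multimodal types are collapsed into a single `Blob` type backed by raw bytes, and the "any" type is omitted for brevity.

```python
import json
from dataclasses import dataclass
from typing import Any


# Hypothetical column types, loosely following the properties listed above.
@dataclass
class Primitive:
    py_type: type  # int, float, str or bool


@dataclass
class ListOf:
    item: Any  # type of every element


@dataclass
class Struct:
    fields: dict  # field name -> column type


@dataclass
class Blob:
    """Opaque binary data; stands in for image/audio/video columns here."""


@dataclass
class Json:
    """Any JSON-serializable value, without a strict schema."""


def check(value, col_type) -> bool:
    """Return True if `value` conforms to `col_type`."""
    if isinstance(col_type, Json):
        try:
            json.dumps(value)
            return True
        except (TypeError, ValueError):
            return False
    if isinstance(col_type, Blob):
        return isinstance(value, (bytes, bytearray))
    if isinstance(col_type, Primitive):
        # bool is a subclass of int in Python, so reject it for non-bool columns.
        if isinstance(value, bool) and col_type.py_type is not bool:
            return False
        return isinstance(value, col_type.py_type)
    if isinstance(col_type, ListOf):
        return isinstance(value, list) and all(check(v, col_type.item) for v in value)
    if isinstance(col_type, Struct):
        return (isinstance(value, dict)
                and value.keys() == col_type.fields.keys()
                and all(check(value[k], t) for k, t in col_type.fields.items()))
    raise TypeError(f"unknown column type: {col_type!r}")


class Table:
    """An in-memory table that validates every row against its schema on write."""

    def __init__(self, schema: dict):
        self.schema = schema
        self.rows = []

    def write(self, row: dict) -> None:
        if row.keys() != self.schema.keys():
            raise ValueError(f"columns {sorted(row)} do not match {sorted(self.schema)}")
        for name, col_type in self.schema.items():
            if not check(row[name], col_type):
                raise ValueError(f"column {name!r}: {row[name]!r} is not a valid {col_type}")
        self.rows.append(row)  # only validated rows ever reach storage


videos = Table({
    "video": Blob(),
    "file_metadata": Json(),
    "duration_sec": Primitive(int),
    "human_scenes": ListOf(Struct({"from_sec": Primitive(int), "to_sec": Primitive(int)})),
})
videos.write({"video": b"<bytes>", "file_metadata": {"fps": 30}, "duration_sec": 120,
              "human_scenes": [{"from_sec": 0, "to_sec": 17}]})
```

A row whose `duration_sec` is the string `"120"` would be rejected with a `ValueError` before it is stored, which is the point of validating on write rather than on read.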

Note that such a notion of a dataset is a natural extension of the traditional database table, but also covers AI-specific use cases. Let me highlight one particular aspect: it provides a foundation for working with multimodal data, which is a core part of the AI world in 2025.

An example of a dataset would look like:

| video: video | file_metadata: json | duration_sec: int | audio_tracks: dict<str, audio> | human_scenes: list<from_sec: int, to_sec: int> | human_embeddings: list<vector<1536, fp16>> |
|---|---|---|---|---|---|
| <video_1.mp4> | | 120 | | [{"from_sec": 0, "to_sec": 17}, {"from_sec": 30, "to_sec": 109}] | [[0.1, ...], [0.2, ...]] |
| <video_2.mp4> | | 671 | | [{"from_sec": 17, "to_sec": 119}] | [[0.3, ...]] |
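One practical benefit of this layout is that metadata columns can be scanned without reading heavy objects. In the sketch below, the `video` column holds a lazy reference that fetches bytes only when asked; `BlobRef` and the in-memory `STORE` are hypothetical stand-ins for a real object store, while the row values are taken from the table above.

```python
from dataclasses import dataclass

# Hypothetical object store (path -> bytes), standing in for S3 or a distributed FS.
STORE = {"video_1.mp4": b"\x00" * 1024, "video_2.mp4": b"\x00" * 2048}
FETCHES = []  # records every blob actually read from the store


@dataclass
class BlobRef:
    """A lazy pointer to a heavy object; bytes are fetched only on read()."""
    path: str

    def read(self) -> bytes:
        FETCHES.append(self.path)
        return STORE[self.path]


# The two rows from the table above (only some of the columns).
rows = [
    {"video": BlobRef("video_1.mp4"), "duration_sec": 120,
     "human_scenes": [{"from_sec": 0, "to_sec": 17}, {"from_sec": 30, "to_sec": 109}]},
    {"video": BlobRef("video_2.mp4"), "duration_sec": 671,
     "human_scenes": [{"from_sec": 17, "to_sec": 119}]},
]


def human_seconds(row: dict) -> int:
    """Total length of scenes with humans, computed from metadata columns only."""
    return sum(s["to_sec"] - s["from_sec"] for s in row["human_scenes"])


# Keep videos where humans are on screen for more than half of the runtime.
# This filter never touches the `video` column, so no blob is fetched.
selected = [r for r in rows if human_seconds(r) > r["duration_sec"] / 2]
fetched_during_filter = len(FETCHES)

# Heavy bytes are read only for the rows that survived the filter.
payloads = [r["video"].read() for r in selected]
```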

Representing a dataset as a table and using it as an entry point to the underlying objects also addresses another issue of unstructured data: its weak consistency model. When a bunch of objects (e.g., images) are stored as files and their metadata is kept separately, adding or removing objects can easily break downstream consumers. Again, classical data engineering has a well-known answer to this issue: transactionality.

In the model above, the dataset acts as a lightweight facade over the underlying objects (whether they are files, Parquet chunks or dataset-level metadata). It provides snapshot isolation for readers throughout the lifetime of the dataset, and it does not expose dirty state during distributed writing until the dataset is finally closed.
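The snapshot and no-dirty-state guarantees can be illustrated with a toy, single-process model: a dataset is a tuple of immutable chunks, writers stage chunks privately, and closing a writer publishes them in one step. `Dataset`, `Writer` and their methods are hypothetical names; a real system would additionally need durable storage, distributed coordination and failure recovery.

```python
import threading


class Dataset:
    """A facade over immutable chunks; readers only ever see committed state."""

    def __init__(self):
        self._lock = threading.Lock()
        self._committed = ()  # tuple of chunks; each chunk is a tuple of rows

    def snapshot(self) -> tuple:
        # Commits replace self._committed with a new tuple and never mutate the
        # old one, so a snapshot a reader holds stays stable for its lifetime.
        with self._lock:
            return self._committed

    def writer(self) -> "Writer":
        return Writer(self)

    def _publish(self, chunks: list) -> None:
        with self._lock:
            self._committed = self._committed + tuple(chunks)


class Writer:
    """Stages chunks (e.g. produced by many parallel jobs) until close()."""

    def __init__(self, dataset: Dataset):
        self._dataset = dataset
        self._staged = []

    def write_chunk(self, rows) -> None:
        self._staged.append(tuple(rows))  # invisible to readers for now

    def close(self) -> None:
        self._dataset._publish(self._staged)  # all staged chunks appear at once
        self._staged = []

    def abort(self) -> None:
        self._staged = []  # dirty state is dropped and never exposed


def rows_of(snapshot: tuple) -> list:
    return [row for chunk in snapshot for row in chunk]


ds = Dataset()
w = ds.writer()
w.write_chunk([{"id": 1}, {"id": 2}])
w.write_chunk([{"id": 3}])
during_write = ds.snapshot()  # taken while the write is still in progress
w.close()
after_commit = ds.snapshot()
```

Here `during_write` stays empty even after the commit, while `after_commit` contains all three rows.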

On a dataset level, it is also straightforward to implement versioning (a new version is a new dataset, possibly reusing some of the previous data chunks) and lineage tracking (the origin of a dataset can be seen as a part of the dataset-level metadata).
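Versioning and lineage fit the same model naturally. In this sketch (again with hypothetical names), chunks are stored once under a hash of their content, a version is an immutable list of chunk IDs, and a derived version reuses its parent's chunk IDs while recording the parent and the operation as lineage metadata.

```python
import hashlib
import json
from dataclasses import dataclass, field

CHUNKS = {}  # hypothetical content-addressed chunk store: chunk_id -> rows


def put_chunk(rows: list) -> str:
    """Store rows under a hash of their content; identical chunks are stored once."""
    payload = json.dumps(rows, sort_keys=True).encode()
    chunk_id = hashlib.sha256(payload).hexdigest()[:16]
    CHUNKS.setdefault(chunk_id, rows)
    return chunk_id


@dataclass(frozen=True)
class Version:
    """An immutable dataset version: an ordered list of chunk IDs plus lineage."""
    name: str
    chunk_ids: tuple
    lineage: dict = field(default_factory=dict)

    def rows(self) -> list:
        return [row for cid in self.chunk_ids for row in CHUNKS[cid]]


v1 = Version("dialogues@v1", (put_chunk([{"id": 1}, {"id": 2}]), put_chunk([{"id": 3}])))

# v2 appends one new chunk and reuses both v1 chunks without copying them.
v2 = Version(
    "dialogues@v2",
    v1.chunk_ids + (put_chunk([{"id": 4}]),),
    lineage={"parent": v1.name, "operation": "append"},
)
```

Only three chunks exist in storage for the two versions, and `v2.lineage` records where `v2` came from.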

In summary, even amid the renaissance of semi-structured and unstructured data, the most reliable path forward for modern AI is to treat datasets as tables with a schema and to process them transactionally.

A remark on S3 and POSIX limitations. In our experience, complex dataset processing cases require working on several related datasets simultaneously. These datasets cannot be merged, since they describe not the same set of records but separate universes, such as "cooking videos" and "recipes", or "tricky coding agent prompts" and "agent trajectories". For comparison, in the OLTP world of relational databases, interconnected data like this is changed mainly through transactions.

S3-compatible and POSIX-like storage systems lack an interface for performing an atomic operation on a set of objects or files. As a result, batch operations on interconnected datasets require client-side workarounds. This limitation motivates considering more powerful storage solutions with built-in transactional capabilities, which allow staging changes across multiple objects within a single transaction and applying them atomically. One example of such a solution is YTsaurus, which powers our TractoAI product.

However, we acknowledge that for many teams, S3/POSIX is already the default infra layer, so many ML engineers accept these workarounds as normal, even though they wouldn't be tolerated in OLTP systems. That contrast is striking.
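For illustration, here is one common client-side workaround on a local POSIX filesystem: new data is written to fresh files, and both related datasets are published together by atomically replacing a single manifest file that points to them. This relies on `os.replace` being atomic within one POSIX filesystem; the manifest layout and file names are assumptions made for this sketch. Note that the atomicity only holds because every reader agrees to go through the manifest, which is exactly the kind of convention that has to be maintained by hand without built-in transactions.

```python
import json
import os
import tempfile
import uuid


def write_data_file(directory: str, rows: list) -> str:
    """Write rows to a fresh, uniquely named file; existing files are never modified."""
    name = f"{uuid.uuid4().hex}.jsonl"
    with open(os.path.join(directory, name), "w") as f:
        for row in rows:
            f.write(json.dumps(row) + "\n")
    return name


def commit(directory: str, files: dict) -> None:
    """Publish a new manifest (dataset name -> data file) in one atomic step."""
    # The temporary file lives in the same directory, hence on the same filesystem.
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w") as f:
        json.dump(files, f)
        f.flush()
        os.fsync(f.fileno())
    # os.replace is atomic on POSIX: readers see either the old or the new manifest.
    os.replace(tmp_path, os.path.join(directory, "MANIFEST.json"))


def read_all(directory: str) -> dict:
    """Readers must always resolve data through the manifest, never by listing files."""
    with open(os.path.join(directory, "MANIFEST.json")) as f:
        manifest = json.load(f)
    result = {}
    for dataset, name in manifest.items():
        with open(os.path.join(directory, name)) as f:
            result[dataset] = [json.loads(line) for line in f]
    return result


root = tempfile.mkdtemp()
# Both related datasets change together, or not at all, from a reader's point of view.
commit(root, {
    "cooking_videos": write_data_file(root, [{"video_id": 1, "recipe_id": 10}]),
    "recipes": write_data_file(root, [{"recipe_id": 10}]),
})
snapshot = read_all(root)
```

Old data files are never deleted here; removing them safely, only after no reader can still be holding an old manifest, is yet another piece of client-side logic to maintain.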

Data exploration

A related topic is the ease of data exploration. Data accessibility is often limited by weak support for ad-hoc dataset inspection.

Much of the day-to-day work of ML engineers and data scientists is simply looking at the data: searching for biases, coming up with ideas for derived datasets or checking the quality of model outputs. To do this effectively, a specialist needs easy access to:

- Basic statistics about the data: min/max/avg/length of columns, distributions of categorical values, and so on.
- Ad-hoc querying without too much code, ideally through SQL or a similar familiar language.
- Existing uses of the dataset, for example queries that reference it or notebooks that already rely on it, which often provide a faster start than beginning from scratch.
- Interactive inspection of individual values in complex structured formats like JSON. Since raw JSON documents are usually cluttered, reading them as a whole is not practical.
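As a rough illustration of the first and last items, the helpers below compute basic per-column statistics and extract a single nested value from a JSON document by path. They are hypothetical, standard-library-only sketches over a toy list of rows; at data lake scale the same questions would be answered by distributed queries rather than in-process loops.

```python
import json
import statistics
from collections import Counter


def column_stats(rows: list, column: str) -> dict:
    """Basic statistics for one column of a list-of-dicts table."""
    values = [r[column] for r in rows if r.get(column) is not None]
    stats = {"count": len(values), "nulls": len(rows) - len(values)}
    if values and all(isinstance(v, (int, float)) and not isinstance(v, bool) for v in values):
        stats.update(min=min(values), max=max(values), avg=statistics.fmean(values))
    elif values and all(isinstance(v, str) for v in values):
        lengths = [len(v) for v in values]
        stats.update(min_len=min(lengths), max_len=max(lengths),
                     top_values=Counter(values).most_common(3))  # categorical distribution
    return stats


def json_get(document: str, path: str):
    """Pull one nested value out of a JSON string by a dotted path such as 'a.b.0'."""
    node = json.loads(document)
    for key in path.split("."):
        node = node[int(key)] if isinstance(node, list) else node[key]
    return node


# Toy rows for illustration only.
rows = [
    {"lang": "en", "tokens": 120, "meta": '{"source": {"urls": ["a.example", "b.example"]}}'},
    {"lang": "de", "tokens": 80, "meta": '{"source": {"urls": []}}'},
    {"lang": "en", "tokens": None, "meta": '{"source": {"urls": ["c.example"]}}'},
]
print(column_stats(rows, "tokens"))
print(column_stats(rows, "lang"))
print(json_get(rows[0]["meta"], "source.urls.1"))
```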

One benefit of keeping data structured, at least on the outer level, is that it guides a researcher from the very beginning by giving a sense of what the dataset actually contains. This makes exploration easier, both for individual work and for collaboration.

When it comes to the ease of data retrieval, systems address this problem in different ways. For example, Databricks uses Jupyter notebooks as the main entry point. Users write Spark code or SQL queries to generate and display dataframes. While Spark is flexible enough for accessing arbitrary slices of the dataset, the browsing experience in notebooks often feels limited.

On the other hand, Hugging Face offers a dataset viewer. Its interactive capabilities are quite good, but its querying and computation features are restricted. It usually works only on a small fraction of the data, around 5 GiB, while raw datasets at the early stages of the data preparation pipeline are frequently the biggest (sometimes hundreds of petabytes, or even more) and require the most eyeballing to decide how to make use of that massive data.

Also, as of today, most real data processing still happens outside Hugging Face because of its limited storage and lack of advanced transformation features: it is treated as a showcase, not as a workbench. Therefore, its dataset viewer is in practice applied only to the final artifacts of the pipeline. There is also no way for users to share the results of their data exploration, as there is no bundled collaborative mode for SQL or notebooks.

Ideally, there should be a tool as interactive as the Hugging Face viewer, but operating inside a data lake. Something like an IDE for data science: a system that helps with data processing, provides business-intelligence-like primitives for visualization and encourages collaboration by exposing the results of others' work. This system must track and visualize ad-hoc queries over data made through SQL (which must scale well beyond a petabyte), Jupyter and other interfaces. Ideally, these queries must reside persistently within the system and form part of a workbook that covers both the data and its applications.

Data processing workloads

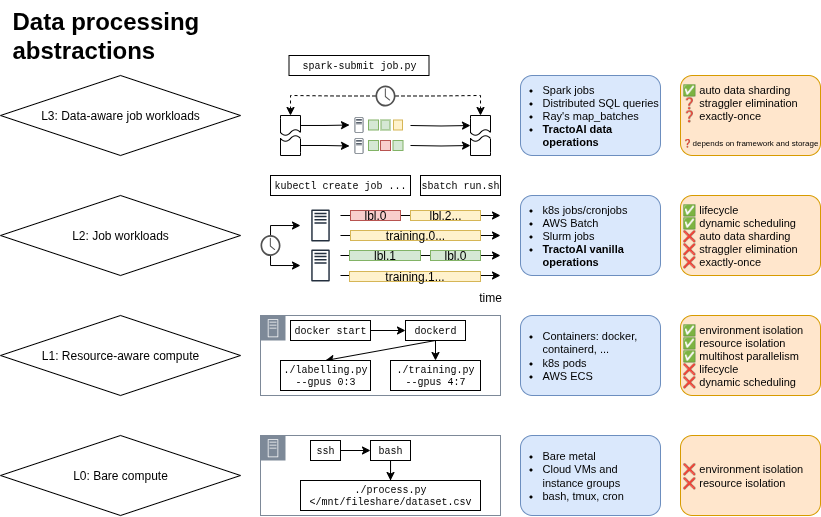

Consider several types of data processing workloads.

- Queries on structured datasets: filtering, aggregation, SQL queries, etc.
- Dataset transformation: for example, text tokenization across a 1 PiB web crawl or image resizing in a photo library.
- Batch inference: for example, labelling data or calculating embeddings for a large dataset.

These workloads share a common trait: they are horizontally scalable, meaning they can benefit from parallelization across many hosts.

Yet the popular AI data processing approaches we have seen in the wild are surprisingly immature, especially when data processing runs on GPU compute. This likely stems from constant capacity hunting (switching from one provider to another complicates infrastructure maintenance) and from the relatively low cross-influence between the post-GenAI ML community and the Big Tech-bred data world. Moreover, many of the latest improvements in the AI field come from academia, where data engineering and data lakes are just not a thing, and running bash scripts on Slurm (reaching L2 in terms of the diagram above) often represents the state of the art.

Take batch inference on GPUs, for example. Some teams we met used the simplest approach: running a fixed number of vLLM processes, each statically assigned a fraction of the dataset, which leads to uneven load distribution and laborious manual orchestration. Others split processing into server processes and pusher processes that query the vLLM servers, which requires separate provisioning and complex load balancing. Inefficiency at this stage can easily lead to low GPU utilization and, consequently, money wasted on compute resources.
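A small simulation shows why static assignment hurts. It assumes, purely for illustration, that per-row cost varies and that expensive rows are clustered in one region of the dataset (for example, a shard of unusually long documents). It compares giving each worker a fixed contiguous slice with letting idle workers pull the next chunk from a shared queue; the cost model is made up, so only the relative comparison is meaningful.

```python
import heapq
import random


def static_makespan(costs: list, workers: int) -> float:
    """Each worker gets a fixed contiguous slice; the job ends when the slowest finishes."""
    size = -(-len(costs) // workers)  # ceiling division
    return max(sum(costs[i:i + size]) for i in range(0, len(costs), size))


def dynamic_makespan(costs: list, workers: int, chunk: int) -> float:
    """Idle workers pull the next chunk from a shared queue (greedy list scheduling)."""
    free_at = [0.0] * workers  # min-heap of times at which each worker becomes idle
    for start in range(0, len(costs), chunk):
        earliest = heapq.heappop(free_at)
        heapq.heappush(free_at, earliest + sum(costs[start:start + chunk]))
    return max(free_at)


random.seed(0)
# Hypothetical cost model: 8,000 cheap rows followed by 2,000 expensive ones.
costs = [random.uniform(1, 2) for _ in range(8_000)] + [random.uniform(5, 10) for _ in range(2_000)]

print(f"static:  {static_makespan(costs, workers=8):.0f}")
print(f"dynamic: {dynamic_makespan(costs, workers=8, chunk=50):.0f}")
```

With this skewed cost model, the worker that owns the expensive tail determines the static makespan, while the shared queue spreads that tail across all workers.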

Simply put, raw compute orchestration frameworks don't simplify data-intensive scenarios, since they know nothing about what each process is doing. Where a data-aware scheduler could have taken the input of a failed worker and distributed that work across the remaining workers, even the best data-agnostic scheduler would have to re-run the whole job.
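The difference in recovery cost can be sketched by counting chunk executions. In this hypothetical model, workers pull chunks round-robin from a queue and one worker crashes mid-chunk. The data-aware scheduler knows which chunk was in flight and re-queues only that chunk for the remaining workers; the data-agnostic one is assumed to fail fast and rerun the whole job from scratch.

```python
from collections import deque


def data_aware(num_chunks: int, workers: int, crash: tuple) -> int:
    """Total chunk executions when workers pull chunks from a shared queue.

    `crash` = (worker_id, n): that worker dies while processing its n-th chunk.
    The scheduler knows which chunk was in flight and re-queues only that one.
    """
    crash_worker, crash_at = crash
    queue, alive = deque(range(num_chunks)), list(range(workers))
    started = {w: 0 for w in alive}
    done, executions = set(), 0
    while queue:
        if not alive:
            raise RuntimeError("no workers left")
        for w in list(alive):  # one round: every live worker takes a chunk
            if not queue:
                break
            chunk = queue.popleft()
            executions += 1
            started[w] += 1
            if w == crash_worker and started[w] == crash_at:
                alive.remove(w)      # the worker is lost...
                queue.append(chunk)  # ...but only its in-flight chunk is redone
            else:
                done.add(chunk)
    assert done == set(range(num_chunks))
    return executions


def data_agnostic(num_chunks: int, workers: int, crash: tuple) -> int:
    """Same crash, but the orchestrator only sees a failed process and restarts the job.

    Assumes the job fails fast at the crash and the rerun succeeds.
    """
    crash_worker, crash_at = crash
    # Chunks executed (in the same round-robin order) up to and including the crash.
    wasted = (crash_at - 1) * workers + crash_worker + 1
    return wasted + num_chunks


print(data_aware(100, workers=4, crash=(2, 5)))     # 101
print(data_agnostic(100, workers=4, crash=(2, 5)))  # 119
```

In the data-aware case the waste is a single chunk regardless of when the crash happens; in the data-agnostic case it grows with how far the job had progressed.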

Let's pause to take another look at the diagram at the beginning of this section.

With this hierarchy in mind, we can see which piece of the puzzle is missing. When it comes to data processing in the AI industry, we have been thrown back to a lower level of abstraction, ending up at L2 (data-agnostically scaled workloads) at most, even though all the necessary means of powerful data processing had already been invented.

Thanks to the famous MapReduce paper, it is now rare to see classic structured Big Data processing implemented as a deployment of server-side code. Instead, declarative approaches and powerful scheduling frameworks (e.g., Spark, Beam, Hive) handle provisioning and execution, allowing us to focus on data flow.

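Imagine an abstract MapReduce system in which a map operation can be run with code like this:

```python
def expand_dialogue_mapper(row: dict):
    # Split a multi-turn dialogue into alternating user/bot turns and emit one
    # (prompt, response) pair per exchange; a trailing unpaired turn is skipped.
    turns = row["dialogue"].split("\n")
    for i in range(0, len(turns) - 1, 2):
        user, bot = turns[i], turns[i + 1]
        yield {"prompt": user, "response": bot}


# Transforms a dataset of dialogues into a dataset of Q&A pairs.
# run_map is provided by the (abstract) MapReduce system and is not defined here.
run_map(
    expand_dialogue_mapper,
    source_table="/home/max/dialogues",
    destination_table="/home/max/qna_pairs")
```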

As the code makes clear, the scheduling framework is exposed to the inner logic of the processing: by choosing map semantics for an operation, we tell the scheduler that workers process dataset rows independently, so it can adjust the number of workers by subdividing processing chunks according to actual performance. By the way, TractoAI does this automatically.
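For a hands-on feel of these semantics, below is a toy, single-machine stand-in for the abstract `run_map` above; it is a hypothetical sketch, not TractoAI's API. Tables are in-memory lists keyed by path, the input is split into fixed-size chunks and the chunks are mapped by a thread pool. Because each row is processed independently, changing `chunk_size` or `workers` does not change the result, which is precisely the freedom a real scheduler exploits.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import chain

TABLES = {}  # hypothetical in-memory storage: table path -> list of rows


def run_map(mapper, source_table: str, destination_table: str,
            chunk_size: int = 1000, workers: int = 4) -> None:
    """Toy map operation: split the input into chunks and map them in parallel."""
    rows = TABLES[source_table]
    # Rows are processed independently, so any split between rows is valid;
    # a real scheduler could also re-split chunks based on observed throughput.
    chunks = [rows[i:i + chunk_size] for i in range(0, len(rows), chunk_size)]

    def process(chunk: list) -> list:
        return [out for row in chunk for out in mapper(row)]

    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map returns results in chunk order, so the output order is stable.
        outputs = list(pool.map(process, chunks))
    TABLES[destination_table] = list(chain.from_iterable(outputs))


def expand_dialogue_mapper(row: dict):
    turns = row["dialogue"].split("\n")
    for i in range(0, len(turns) - 1, 2):
        yield {"prompt": turns[i], "response": turns[i + 1]}


TABLES["/home/max/dialogues"] = [
    {"dialogue": "Hi\nHello!\nHow are you?\nFine, thanks."},
    {"dialogue": "What is 2+2?\n4"},
]
run_map(expand_dialogue_mapper, "/home/max/dialogues", "/home/max/qna_pairs", chunk_size=1)
```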

Moreover, this paradigm allows treating batch inference on GPUs as a straightforward case of the very same map operation. Indeed, at the level of computation semantics, there is no difference between expand_dialogue_mapper defined above and StoryTellingMapper below:

```python
class StoryTellingMapper:
    # This is called once at the very beginning.
    def start(self):
        import torch
        from transformers import AutoModelForCausalLM, AutoTokenizer

        model_id = 'roneneldan/TinyStories-1M'
        tok_id = 'EleutherAI/gpt-neo-125M'
        self.tokenizer = AutoTokenizer.from_pretrained(tok_id)
        self.model = AutoModelForCausalLM.from_pretrained(model_id, torch_dtype=torch.float16)
        self.model.to("cuda").eval()

    # This is called for each row of the input table.
    def __call__(self, row: dict):
        prompt = 'Once upon a time, there was a little ' + row['hero']
        inputs = self.tokenizer(prompt, return_tensors='pt').to('cuda')
        output_ids = self.model.generate(
            **inputs,
            max_new_tokens=150,
            do_sample=True,
            temperature=0.9,
            top_p=0.95,
            repetition_penalty=1.1,
            pad_token_id=self.tokenizer.eos_token_id,
        )
        story = self.tokenizer.decode(output_ids[0], skip_special_tokens=True)
        yield {'story': story}
```

Basically, anything callable can be seen as a mapper. The only requirement is that it yields output rows, no matter what it does under the hood.
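How a framework might drive both kinds of mappers can be sketched in a few lines. The convention assumed here mirrors the comments in `StoryTellingMapper` but is otherwise hypothetical: if the mapper has a `start` method, the worker calls it once before the first row, then calls the mapper on each row and forwards whatever it yields. `PrefixMapper` is a GPU-free stand-in so that the sketch runs anywhere.

```python
def run_worker(mapper, rows):
    """Feed one worker's share of rows through a function or a class-based mapper."""
    if hasattr(mapper, "start"):
        mapper.start()  # one-time setup per worker, e.g. loading model weights
    for row in rows:
        yield from mapper(row)  # a mapper may emit zero, one or many rows


class PrefixMapper:
    """A GPU-free stand-in for StoryTellingMapper, with the same shape."""

    def start(self):
        self.prefix = "Once upon a time, there was a little "
        self.start_calls = getattr(self, "start_calls", 0) + 1

    def __call__(self, row: dict):
        yield {"story": self.prefix + row["hero"]}


def upper_mapper(row: dict):
    yield {"story": row["hero"].upper()}


heroes = [{"hero": "dragon"}, {"hero": "robot"}]
class_out = list(run_worker(PrefixMapper(), heroes))
func_out = list(run_worker(upper_mapper, heroes))
```

The worker loop is identical for both mappers; only the optional `start` hook distinguishes a stateful, model-holding mapper from a plain function.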

Another advantage of the data-aware approach, closely related to the failure handling discussed above, is that the scheduler, knowing which jobs succeeded, can easily and correctly assemble the final dataset from the outputs of individual jobs. With data-agnostic orchestration, handling job failures may be tricky. A frequent approach in that case is simply to fail the whole computation and re-run it from the beginning, which wastes a lot of work.
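A minimal sketch of this output-collection step, under simplifying assumptions (hypothetical names, in-memory storage): every job attempt writes to its own staging slot, the scheduler records which attempt of each job succeeded, and the final table is assembled only from those winning attempts. Partial output from a crashed attempt is simply ignored, so retrying one job never duplicates or corrupts rows, and nothing else has to be rerun.

```python
from dataclasses import dataclass, field


@dataclass
class OutputCollector:
    staged: dict = field(default_factory=dict)     # (job_id, attempt) -> rows
    succeeded: dict = field(default_factory=dict)  # job_id -> winning attempt

    def stage(self, job_id: int, attempt: int, rows: list) -> None:
        """A job attempt writes its output; nothing is visible yet."""
        self.staged[(job_id, attempt)] = rows

    def mark_succeeded(self, job_id: int, attempt: int) -> None:
        # The first successful attempt wins; later duplicate attempts are ignored.
        self.succeeded.setdefault(job_id, attempt)

    def finalize(self, num_jobs: int) -> list:
        missing = [j for j in range(num_jobs) if j not in self.succeeded]
        if missing:
            raise RuntimeError(f"jobs without a successful attempt: {missing}")
        # Assemble in job order, using winning attempts only.
        return [row for j in range(num_jobs) for row in self.staged[(j, self.succeeded[j])]]


collector = OutputCollector()
collector.stage(0, 0, [{"x": 1}, {"x": 2}])
collector.mark_succeeded(0, 0)
collector.stage(1, 0, [{"x": 3}])            # attempt 0 of job 1 crashed after partial output
collector.stage(1, 1, [{"x": 3}, {"x": 4}])  # only job 1 is retried
collector.mark_succeeded(1, 1)
final = collector.finalize(num_jobs=2)
```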

What's next?

These observations were made over time, not in one day, while listening to internal and external clients of TractoAI and trying various approaches in practice. We have implemented some of the principles discussed here in our product and continue working on the others. Give it a try on our Playground, or in the self-service.

We are happy to discuss these topics and plan to expand on them in the next posts. Leave a comment, or talk to us via Discord if you are interested!