Modern Cloud AI Stack

Run, scale, and collaborate on AI and data workloads on a unified compute platform.

Teams choosing to build with TractoAI

The AI frontier is shifting towards data. Is your stack ready?

General-purpose AI models are quickly becoming commodities. The value is moving towards proprietary data and custom models. Today’s tooling isn’t built for data-centric, personalized AI.

Introducing TractoAI

• Run the entire ML workflow (data prep, training, and inference) in a single shared environment
• Handle all types of data (images, video, audio, text, and tabular) without switching tools
• Cut AI and data infrastructure costs vs. hyperscalers, with no performance compromise
• Access a large pool of CPUs and GPUs. Automatically scale resources up or down
• No cluster setup. Run your jobs with serverless execution and usage-based pricing
• Dedicated cluster for production workloads

Connect data and build custom AI workflows using built-in open source frameworks and a scalable compute runtime. Interactive notebooks or SDKs, your choice.

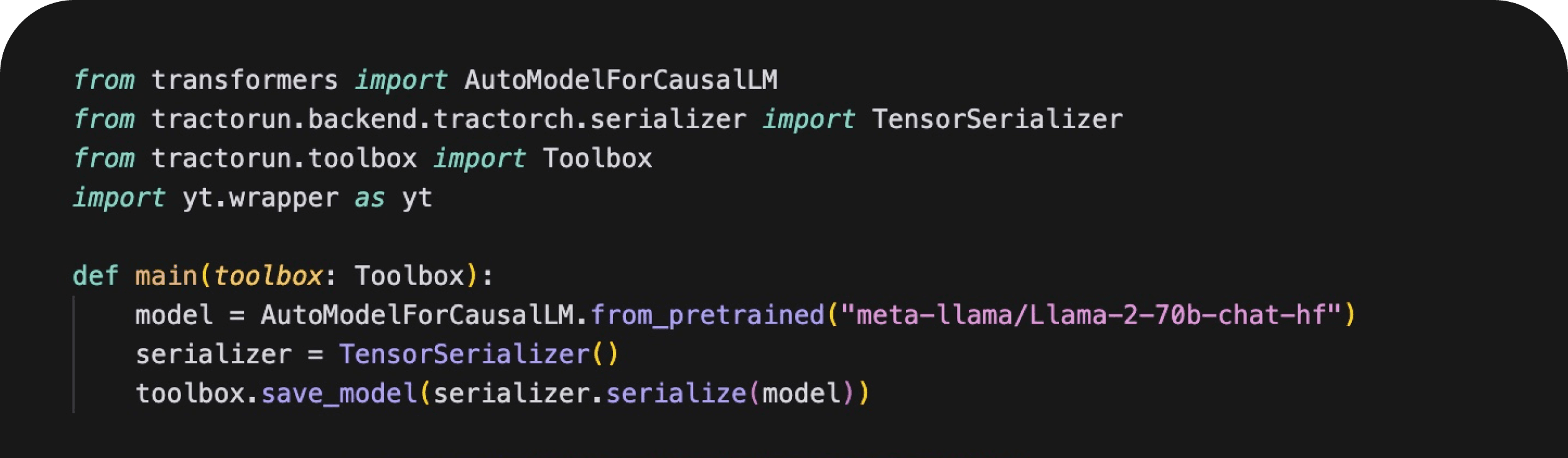

Fine-tune models on your data

Fine-tune or distill popular open source models like DeepSeek, Llama, or Flux. Experiment and tweak models at scale with dynamic compute.

Train your own AI models

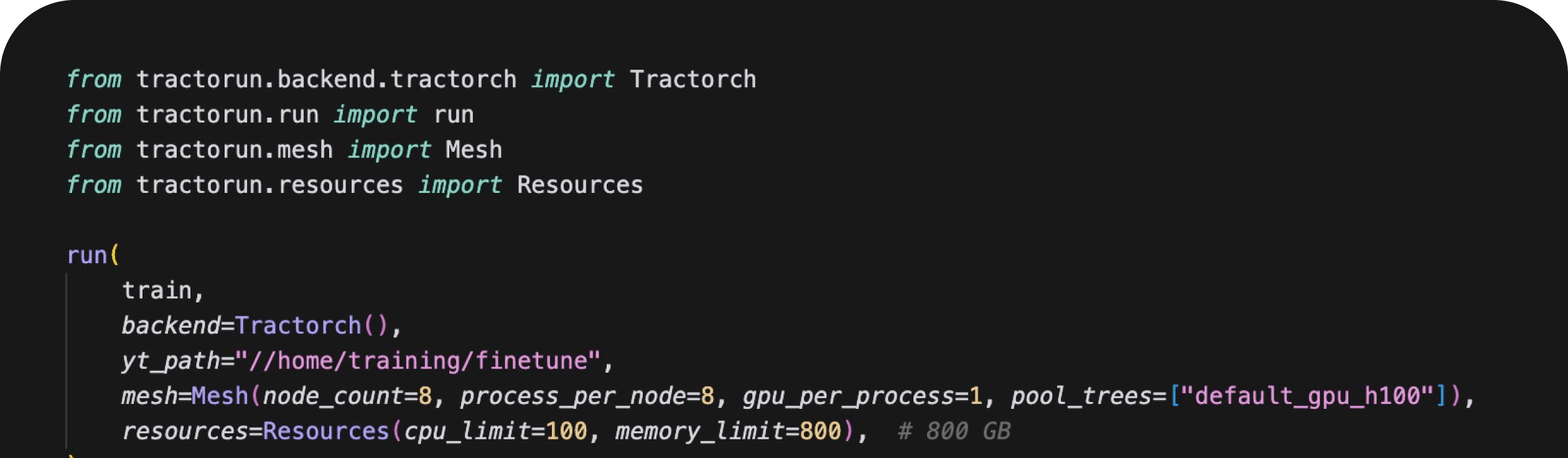

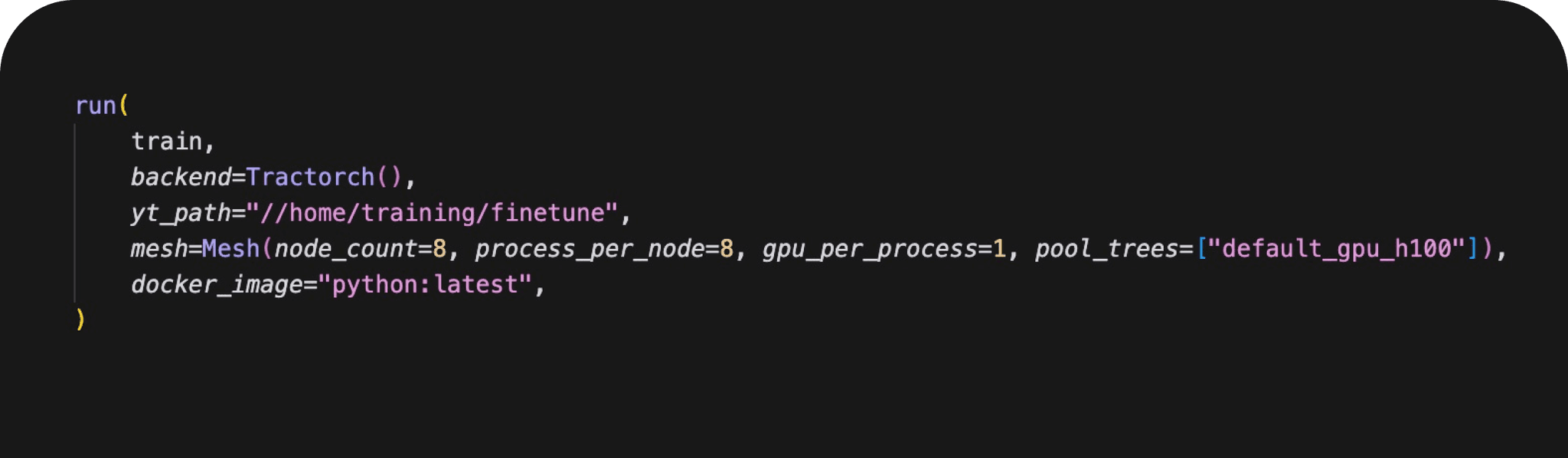

Build custom models with the Tracto distributed training launcher. It supports major libraries such as PyTorch, Hugging Face, and nanoGPT. Scale up to hundreds of GPUs in seconds, store checkpoints, and monitor results on W&B.

LLM batch inference

Run distributed batch inference jobs to increase throughput and utilize both CPUs and GPUs. Built-in support for popular inference libraries such as vLLM and SGLang.
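To make the idea of batch inference concrete, here is a minimal, generic sketch in plain Python. It is not TractoAI's API: `chunk`, `fake_generate`, and `run_batch_inference` are hypothetical names, and `fake_generate` is a stand-in for a real model call. The sketch only shows the general pattern of splitting prompts into batches and fanning them out to a pool of workers while keeping results in input order.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterator, List, Sequence


def chunk(items: Sequence[str], batch_size: int) -> Iterator[List[str]]:
    """Yield consecutive batches of at most batch_size items."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    for start in range(0, len(items), batch_size):
        yield list(items[start:start + batch_size])


def fake_generate(prompts: List[str]) -> List[str]:
    """Hypothetical stand-in for a model call.

    A real job would pass the batch to an inference engine here.
    """
    return [p.upper() for p in prompts]


def run_batch_inference(
    prompts: Sequence[str],
    generate: Callable[[List[str]], List[str]] = fake_generate,
    batch_size: int = 4,
    workers: int = 2,
) -> List[str]:
    """Run generate over all prompts in batches, preserving input order."""
    batches = list(chunk(prompts, batch_size))
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Executor.map returns results in the order the batches were submitted,
        # even if workers finish out of order.
        results = pool.map(generate, batches)
        return [out for batch_out in results for out in batch_out]


if __name__ == "__main__":
    print(run_batch_inference(["hello", "world", "tracto"], batch_size=2))
```

In a real distributed job each worker would typically be a separate process or node holding its own copy of the model, and the batch size would be tuned to the available GPU memory. The thread pool here only illustrates the fan-out pattern.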

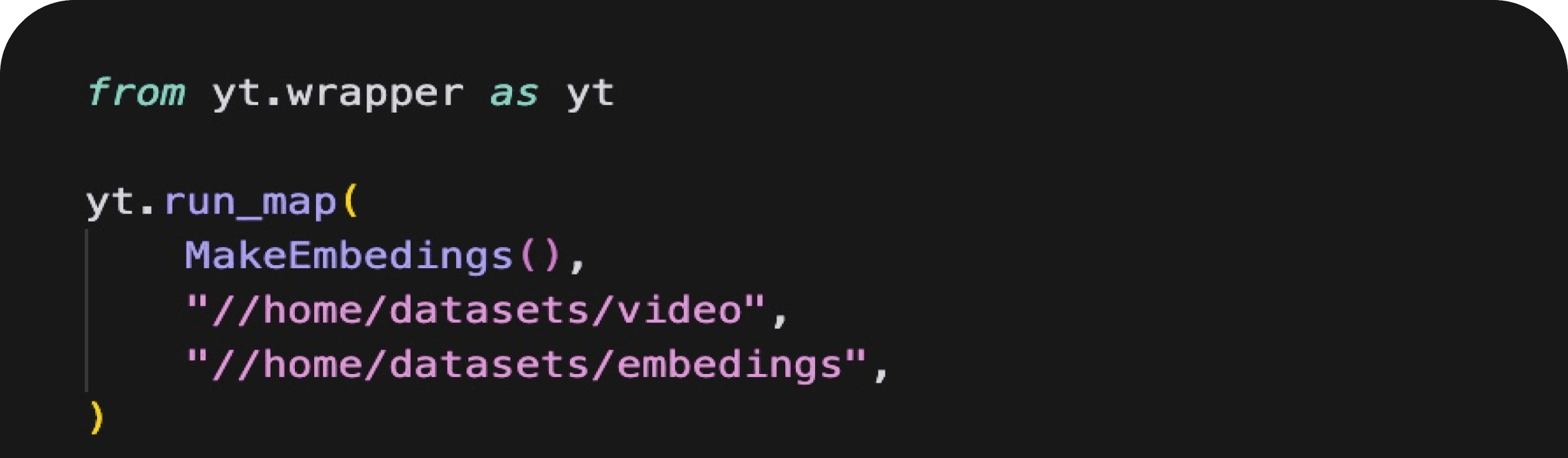

Multimodal data processing

Process structured and unstructured data including images, video, and audio. Synthetically generate data at scale for reinforcement learning.

Work with your favorite tools and save on integration work

Products and interfaces
• Notebook
• Workflows
• Docker registry
• Web interface
• SDK

Computations and data
• SQL
• Ray
• Files
• Structured metadata
• MapReduce
• AI models
• Dataset tables

Resource layer
• Serverless GPU and CPU compute
• Cypress distributed filesystem

Underlying infrastructure
• Compute cloud
• Bare metal racks

Scale individual workloads and ML platforms with framework-friendly tools

Data scientists and ML researchers
• Distribute AI workloads across multiple nodes without infrastructure expertise
• Leverage the ML ecosystem with extensible integrations

Data & ML platform builders
• Compute abstractions for creating scalable data & ML platforms
• APIs to integrate with data and ML tools

MLOps & DevOps teams
• Automatic job orchestration and scheduling
• Autoscaling: scale to hundreds of GPUs/CPUs

Ready for AI workloads

Tracto automates deployment and scaling for tasks like ML training, exploratory analysis, and big data processing, enabling teams to iterate faster.

Prove out your research and bootstrap your AI roadmap with TractoAI serverless