We are excited to introduce Tractorun, a powerful open-source tool for running distributed machine learning workloads.

Tractorun allows you to submit discrete jobs such as batch inference, model training, and reinforcement learning for execution on a cluster of GPUs.

What can you do with Tractorun?

Tractorun is ideal for launching any computational tasks that require or benefit from GPU acceleration. AI workloads in particular benefit from distributed computing to speed up their execution:

-

Training and fine-tuning models. Use Tractorun to train models across multiple compute nodes simply.

-

Offline batch inference. Run distributed inference to process millions of prompts.

-

Reinforcement learning tasks. Use Tractorun to scale and optimize reinforcement learning model training and evaluation.

For additional practical examples refer to the Github with solution notebooks.

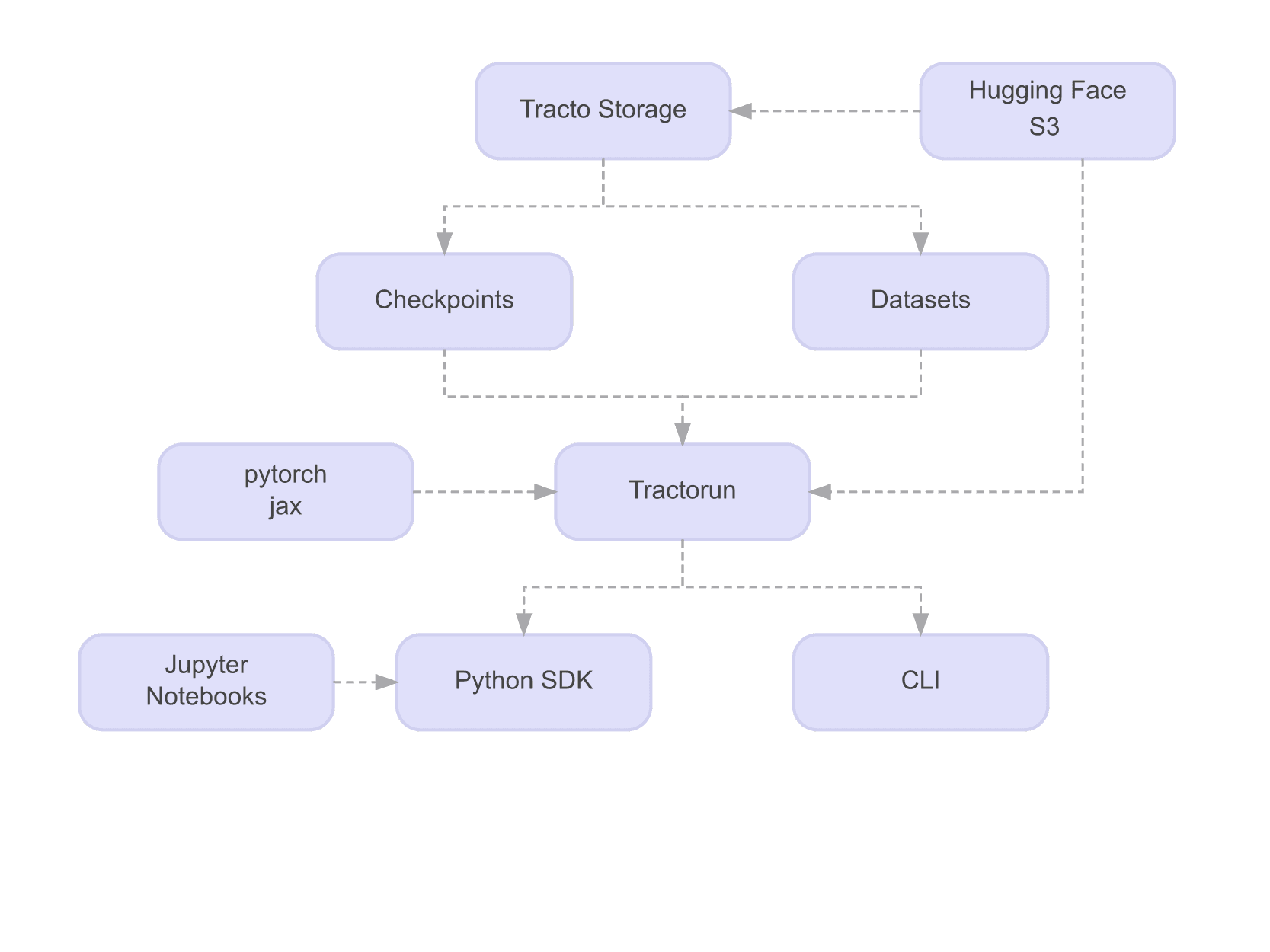

How does it work?

Submit a job using tractorun main.py and Tractorun will upload your job and run it on the TractoAI cloud. Built for production, Tractorun ensures scalable and reliable performance for your workloads. You can get started quickly without touching Kubernetes, Slurm, or configuring cloud service providers.

Tractorun offers two developer-friendly interfaces:

- Python SDK for Jupyter Notebooks and fast prototyping.

- CLI with YAML configuration files for production-ready workflows.

Why Tractorun?

Tractorun increases development velocity and offers production-grade enterprise features:

- Fully managed service. Tractorun coordinates the execution of distributed workloads, enabling AI and Data engineers to focus on coding scalable applications rather than infrastructure.

- Scalability. Easily scale workloads across multiple nodes

- Integrations. Supports a growing ecosystem of ML libraries (Pytorch, Jax, HF transformers) and inference frameworks (vllm, sglang).

- Monitoring and observability. Real-time visual dashboard to monitor and manage running jobs.

- Part of Tracto.ai. Store your data, and run Map/Reduce, Spark, ClickHouse and Tractorun jobs in unified pipelines.

Tractorun in practice

In this article, we’ll explore how to use Tractorun to fine-tune a TinyStories-3M model, enabling it to generate fairy tales about Tracto AI.

This step-by-step guide will cover:

- Bulk offline inference. Using the vllm and Llama-3.2-3B-Instruct model to generate a dataset and store it as a structured table in YTSaurus.

- Defining an effective PyTorch Dataset to interact with the YTSaurus table.

- Fine-Tuning the roneneldan/TinyStories-3M model. Running fine-tuning with the Hugging Face transformers library.

- Evaluating the fine-tuned model.

We are going to use tractorun python sdk in this example, but you can also use the CLI with YAML configuration files for production-ready workflows. Also simple example for cli can be found on Github.

The completed example is available on Github

Offline inference

In the first step, we'll use vLLM for batch inference Llama to generate a dataset of fairy tales. To do this, we'll:

- Implement prepare_dataset function that will be run on Tracto.ai platform to infer the model.

- Call this function using tractorun.run.

Here's how to set up the inference process:

import yt.wrapper as yt

from yt import type_info

from tractorun.toolbox import Toolbox

from tractorun.run import run

from tractorun.mesh import Mesh

from tractorun.resources import Resources

from tractorun.backend.generic import GenericBackend

from tractorun.backend.tractorch import Tractorch

TALES_PER_JOB = 500

MAX_TOKENS = 1000

# to use llama, you need to get access on page <https://huggingface.co/meta-llama/Llama-3.2-3B-Instruct>

# and provide your HF token

hf_token = os.environ.get("YT_SECURE_VAULT_HF_TOKEN", "") or os.environ.get("HF_TOKEN", "")

def prepare_dataset(toolbox: Toolbox):

os.environ["HF_TOKEN"] = hf_token

os.environ["CUDA_VISIBLE_DEVICES"] = str(toolbox.coordinator.get_process_index())

self_index = toolbox.coordinator.get_self_index()

table_path = f"{datasets_path}/dataset_{self_index}"

llm = LLM(model="meta-llama/Llama-3.2-3B-Instruct", seed=self_index,)

sampling_params = SamplingParams(

temperature=0.9,

top_p=0.85,

max_tokens=MAX_TOKENS,

)

conversations = [

[

{

"role": "system",

"content": "You are a professional storyteller. Write the story in one paragraph, without line breaks. A user will now ask you to tell a fairy tale, and you must create a story featuring Tracto.ai. Tracto.ai the a ai-startup that provides infrastructure for machine learning and big data processing."

},

{

"role": "user",

"content": f"Write the {index}th fairy tail about some animal please.",

},

] for index in range(TALES_PER_JOB)

]

results = llm.chat(

messages=conversations,

sampling_params=sampling_params,

)

toolbox.yt_client.create("table", table_path, attributes={"schema": schema.to_yson_type()}, force=True)

toolbox.yt_client.write_table(table_path, ({"text": result.outputs[0].text} for result in results))

# run this inference in parallel on 2 nodes with 8 h200 GPUs each

run(

prepare_dataset,

backend=GenericBackend(),

yt_path=f"{working_dir}/tractorun_inference",

mesh=Mesh(node_count=2, gpu_per_process=1, process_per_node=8, pool_trees=["gpu_h200"]),

resources=Resources(

cpu_limit=64,

memory_limit=322122547200,

),

)

Define YTDataset for PyTorch

Now that we have generated a dataset of fairy tales using bulk inference, we need to define a PyTorch dataset to efficiently load and process this data during training. This allows to stream data from YTSaurus and apply necessary transformations for the model training.

from tractorun.backend.tractorch import YtDataset

class YTTransform:

def __init__(self, tokenizer: AutoTokenizer):

self._tokenizer = tokenizer

def __call__(self, columns: list[str], row: dict) -> tuple:

assert columns == ["text"]

input_ids = self._tokenizer(yt.yson.get_bytes(row["text"]).decode(), padding="max_length", max_length=MAX_TOKENS)["input_ids"]

return {

"input_ids": input_ids,

}

# split table into train and eval datasets

def get_dataset(

path: str,

tokenizer: AutoTokenizer,

yt_client: yt.YtClient,

) -> tuple[YtDataset, YtDataset]:

start = 0

end = yt_client.get(path + "/@row_count")

train_end = int(end * 0.8)

eval_start = train_end + 1

train_dataset = YtDataset(path=path, yt_client=yt_client, transform=YTTransform(tokenizer), start=start, end=train_end, columns=["text"])

eval_dataset = YtDataset(path=path, yt_client=yt_client, transform=YTTransform(tokenizer), start=eval_start, end=end, columns=["text"])

return train_dataset, eval_dataset

Fine-tuning

Now that we have the dataset defined, we can fine-tune the roneneldan/TinyStories-3M model using the Hugging Face transformers library. To do this, we'll:

- Implement training function that will be run on Tracto.ai platform to train the model.

- Call this function using tractorun.run.

Here's how to set up the fine-tuning process on 2 nodes with data parallelism:

from tractorun.backend.tractorch.serializer import TensorSerializer

def training(toolbox: Toolbox):

model = AutoModelForCausalLM.from_pretrained(

"roneneldan/TinyStories-3M",

trust_remote_code=True,

use_cache=False,

)

tokenizer = AutoTokenizer.from_pretrained("EleutherAI/gpt-neo-125M")

tokenizer.pad_token = tokenizer.eos_token

data_collator = DataCollatorForLanguageModeling(tokenizer, pad_to_multiple_of=2, mlm=False)

train_dataset, eval_dataset = get_dataset(

path=dataset_path,

tokenizer=tokenizer,

yt_client=toolbox.yt_client,

)

args = TrainingArguments(

output_dir="/tmp/results",

per_device_train_batch_size=2,

gradient_accumulation_steps=1,

eval_on_start=True,

eval_strategy="epoch",

num_train_epochs=8,

weight_decay=0.1,

lr_scheduler_type="constant",

learning_rate=5e-5,

save_steps=0.0, # don't save checkpoints

logging_dir=None,

logging_strategy="epoch",

fp16=True,

push_to_hub=False,

batch_eval_metrics=False,

accelerator_config=AcceleratorConfig(

split_batches=True,

dispatch_batches=True,

),

)

args = args.set_dataloader(train_batch_size=16, drop_last=True)

trainer = Trainer(

model=model,

processing_class=tokenizer,

args=args,

data_collator=data_collator,

train_dataset=train_dataset,

eval_dataset=eval_dataset,

)

trainer.train()

# save the model as an yt-file on the primary node

if toolbox.coordinator.is_primary():

toolbox.save_model(TensorSerializer().serialize(trainer.model))

run(

training,

backend=Tractorch(),

yt_path=f"{working_dir}/tractorun_training",

# run this training on 2 node with 8 h200 GPUs using data parallelism

mesh=Mesh(node_count=1, gpu_per_process=2, process_per_node=8, pool_trees=["gpu_h200"]),

resources=Resources(

cpu_limit=64,

memory_limit=322122547200,

),

)

Conclusions

To learn more about the Tracto.ai platform and get access to it, visit our website. You can find the example from this article and more on GitHub, such as:

- DeepSeek R1 batch offline inference.

- Fine-tuning LLaMA.

- Reinforcement learning with verl

- Training a model using MNIST dataset.

- and more.