Hi everyone!

I wanted to share a behind-the-scenes look at how Pleias, a private AI research lab out of France, partnered with us to train a fully open 1B language model.

So, what makes Pleias different? For one, their models are built entirely on non-copyrighted data, something that had never been done before in the foundational LLM space. But what really sets them apart is that their models are the first language models fully compliant with the EU AI Act. That is pretty remarkable, and it sets a new standard for open and safe AI.

We met the Pleias team (Anastasia, Pierre-Carl, and Ivan) through our shared passion for making it easier for developers to build with AI.

The challenges of training models before TractoAI

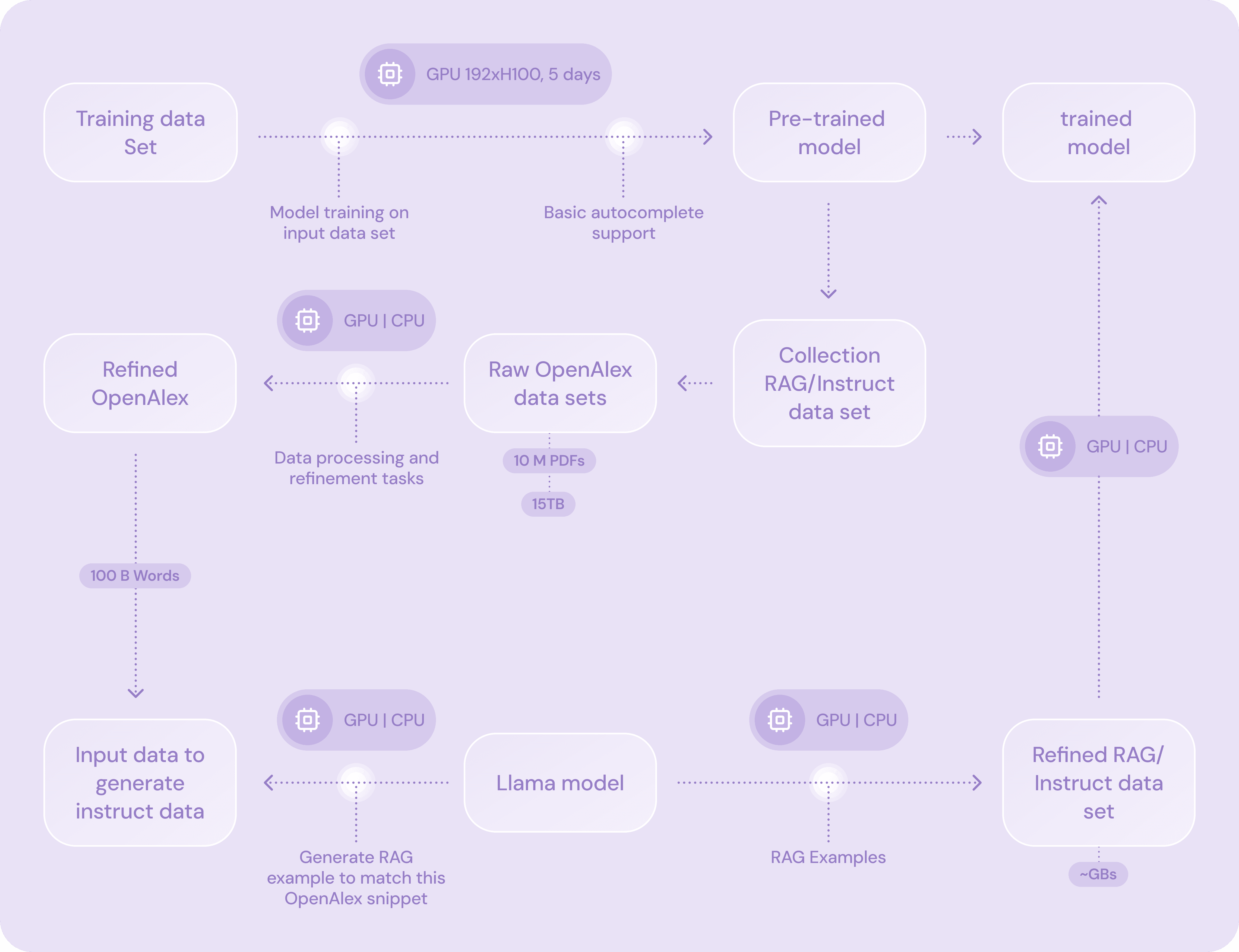

At a high level, data scientists and AI/ML engineers are quite familiar with the process of training a model. You start with raw data, process it through several steps, and create a working dataset ready for training. From there, you use one of the main training libraries, like PyTorch, to handle the training process. Writing the training code is often the easy part; running training jobs in parallel across a cluster of computers is more challenging.

Experience shows that creating a ready-to-use dataset takes time and often requires multiple iterations.

The Pleias team was pulling data from 10 million PDFs to create their training set. On top of that, they augmented their original dataset with thousands of synthetic RAG examples. It was a pretty elaborate data pipeline and processing workflow (more on this in a separate post).

Some of this data processing was lightweight, some needed a heavy CPU workload, and some needed a heavy GPU workload, all depending on the complexity of the operations and the volume of data.

Part of the team had to take on MLOps and DevOps roles, spending significant time managing the data preparation and training infrastructure.

The Pleias team wanted to move fast without having to hire dedicated MLOps engineers. It became clear that their current AI stack was holding them back: it wasn’t flexible or reliable enough for what they needed.

Here’s what they shared with me:

- Scaling with traditional data pipeline tools is expensive. Working with large volumes of data is always messy. Now, imagine trying to build a high-quality dataset from terabytes of unstructured data, like PDFs. Want to bring more compute to speed up your workloads? Traditional tools like Airflow typically limit you to one type of compute instance per cluster, making it hard to add more GPUs and CPUs on demand.

- A need for dedicated MLOps expertise. Training a Gen AI model means orchestrating hundreds of NVIDIA GPUs. Hardware failures caused constant interruptions, and Pleias data scientists spent hours troubleshooting to get training jobs back on track.

- Slow pace of iterations. The team often had to duplicate code and data across notebooks, which caused versioning issues and slowed down progress.

Making everything faster on TractoAI

After learning about the Pleias team’s experience, we knew we could help streamline their workflows, making data preparation and model training faster, more reliable, and far more cost-effective than hiring a dedicated MLOps platform team.

We quickly onboarded the Pleias team to the TractoAI platform and supported them every step of the way through our dedicated Slack channel (we do this for every team we work with), ensuring they were up and running in no time. Here’s how TractoAI helped the Pleias team:

Reliable training infrastructure. Pleias trained their 1B model in just five days using 192 H100 GPUs. They ran everything on Tractorun, our scheduling framework for distributed training. Tractorun monitors training jobs and automatically recovers stuck or failed ones. For the Pleias team, that meant more useful training time and fewer wasted GPU resources.
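To make the idea of recovering failed jobs a bit more concrete, here is a minimal sketch of a supervisor loop that restarts a training function from its last saved checkpoint. This is not Tractorun's API or implementation; `run_with_restarts`, `WorkerFailure`, and `make_flaky_trainer` are hypothetical names made up for illustration, and the "training" is just a counter so the example runs anywhere.

```python
from typing import Callable, Tuple


class WorkerFailure(Exception):
    """Hypothetical error standing in for a hardware or node failure."""


# A training function takes (start_step, total_steps, save_checkpoint).
TrainFn = Callable[[int, int, Callable[[int], None]], None]


def run_with_restarts(
    train_fn: TrainFn, total_steps: int, max_restarts: int = 3
) -> Tuple[int, int]:
    """Run train_fn, resuming from the last checkpoint after each failure.

    Returns (last_saved_step, number_of_restarts).
    """
    last_saved = 0
    restarts = 0

    def save_checkpoint(step: int) -> None:
        # A real system would persist model and optimizer state here.
        nonlocal last_saved
        last_saved = step

    while True:
        try:
            train_fn(last_saved, total_steps, save_checkpoint)
            return last_saved, restarts
        except WorkerFailure:
            if restarts >= max_restarts:
                raise  # give up and surface the failure to a human
            restarts += 1  # otherwise loop and resume from last_saved


def make_flaky_trainer(fail_at_step: int, checkpoint_every: int = 5) -> TrainFn:
    """Build a toy trainer that fails exactly once at fail_at_step."""
    already_failed = False

    def train(start_step: int, total_steps: int, save: Callable[[int], None]) -> None:
        nonlocal already_failed
        for step in range(start_step + 1, total_steps + 1):
            if step == fail_at_step and not already_failed:
                already_failed = True
                raise WorkerFailure(f"simulated failure at step {step}")
            if step % checkpoint_every == 0 or step == total_steps:
                save(step)

    return train


if __name__ == "__main__":
    final_step, restarts = run_with_restarts(make_flaky_trainer(7), total_steps=12)
    print(f"finished at step {final_step} after {restarts} restart(s)")
```

In this toy run the failure at step 7 only costs the two steps since the checkpoint at step 5, which is the point of pairing automatic restarts with frequent checkpoints.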

Smart scaling for data processing. Processing terabytes of unstructured data—think identifying languages, extracting text, and removing duplicates—was far from easy. With TractoAI, they set up tasks in minutes and picked CPUs or GPUs based on what each data processing operation needed.
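As a small illustration of one of those steps, here is a minimal sketch of exact deduplication by content hash. It is not the Pleias pipeline or a TractoAI API; the `normalize` and `deduplicate` helpers are hypothetical, and a real pipeline at this scale would more likely run a distributed or near-duplicate variant of the same idea.

```python
import hashlib
import re
from typing import Iterable, Iterator


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial variants hash the same."""
    return re.sub(r"\s+", " ", text).strip().lower()


def deduplicate(documents: Iterable[str]) -> Iterator[str]:
    """Yield each document whose normalized content has not been seen yet."""
    seen = set()
    for doc in documents:
        digest = hashlib.sha256(normalize(doc).encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            yield doc  # keep the first occurrence, unmodified


if __name__ == "__main__":
    docs = ["Hello  world", "hello world", "Bonjour"]
    print(list(deduplicate(docs)))
```

A step like this is light enough to run on CPUs, whereas something like model-based language identification might be a better fit for GPUs, which is the kind of per-operation choice described above.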

“I think of TractoAI as replacing the work of 3 ML engineers that we would have to hire otherwise. Because how much it abstracts away the infra setup that we need to do model training.”

- Pierre-Carl Langlais, Co-founder at Pleias

Data preparation and model training pipeline

Built-in collaboration. As developers ourselves, we know how frustrating it is to work in silos. So, when we built TractoAI, we designed it with a “collaboration-first” mentality. For the Pleias team, this meant less duplicate work and better alignment across projects.

Iterations unlocked. At TractoAI, we’re all about integration—not just stitching together loose components but building a seamless system optimized for performance. Faster container execution times gave the team an opportunity to push through more experiments at a faster clip.

Keep building!